
Purpose and Goal

The Wormbot system was developed by the Meng Wang Lab at the Howard Hughes Medical Institute's Janelia Research Campus (Janelia). Wormbot supports experiments that require imaging many nematodes throughout their lifetimes to study the mechanisms of aging.

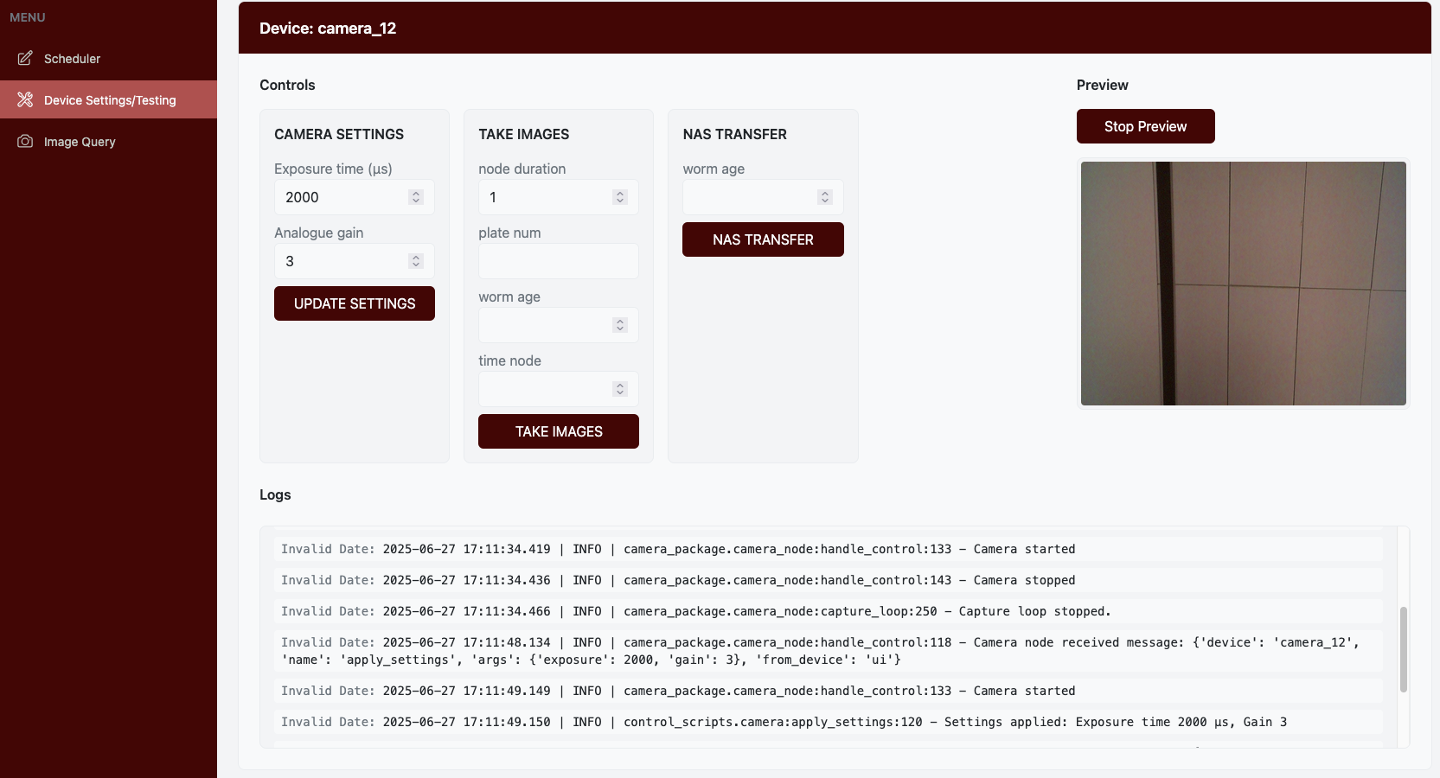

I was brought onto the project to provide software support; specifically, I was asked to develop a web interface that scientists could use to control the imaging experiments. I also helped the lab achieve its goal of a scalable system design, consulting with colleagues in my department to identify and implement a ROS-based messaging system connecting the UI with the cameras, robot, and storage hardware.

Core Requirements

- Provide an intuitive web interface – Allow biologists with varying technical backgrounds to schedule experiments and control hardware without requiring command-line expertise or custom coding.

- Enable scalability and flexibility – Design a system architecture that could easily accommodate additional hardware components as experiments scale up.

- Prioritize accessible hardware – Leverage affordable components like Raspberry Pi computers and cameras to keep costs manageable for labs, and make setting up the software on these components as easy as possible.

Hardware Architecture

The basic features of the setup include:

- "Hotel" storage with slots for six-well agar plates

- Six Arducam cameras, each attached to its own Raspberry Pi 5

- A robot, controlled by a seventh Raspberry Pi 5, that transports plates between hotel storage and the imaging platform

- A scheduler node and ROS bridge server running on another computer on the same local network

- A Synology network-attached storage (NAS) device for image storage

Software Architecture and ROS Implementation

Why ROS?

After evaluating options for inter-device communication, I selected the Robot Operating System (ROS) framework, building on a system developed by my colleague David Schauder for the Tervo Lab at Janelia. ROS offered several advantages over alternatives such as WebSockets:

- Scalability – ROS nodes automatically discover other nodes on the same network within the same ROS domain. Nodes advertise their presence on startup, shutdown, and periodically while running, eliminating the need to manually configure IP addresses when adding hardware.

- Robustness – If a device loses its network connection and is assigned a new IP address upon reconnecting, its ROS nodes can be rediscovered automatically, whereas a WebSocket connection to the old address would break.

- Flexibility – The automatic node discovery enables different labs to configure nodes across whatever hardware setup meets their needs, whether consolidating all nodes on one workstation or distributing them across multiple Raspberry Pis.
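The discovery behavior described above can be illustrated with a deliberately simplified model. The sketch below is not how ROS 2's DDS middleware works internally; the `Announcement` and `NodeRegistry` types, the message kinds, and the 10-second lease are hypothetical. It only shows why tracking peers by node name, refreshing them on periodic announcements, and expiring them after missed announcements lets a node change IP addresses without manual reconfiguration.

```python
from dataclasses import dataclass, field


@dataclass
class Announcement:
    """Hypothetical presence message a node broadcasts (not a real DDS type)."""
    node_name: str
    domain_id: int
    address: str
    kind: str  # "alive" (startup or periodic) or "bye" (shutdown)
    timestamp: float


@dataclass
class NodeRegistry:
    """Tracks peers by node name, so a changed IP simply overwrites the entry."""
    domain_id: int
    lease_seconds: float = 10.0  # hypothetical liveness window
    peers: dict = field(default_factory=dict)  # node_name -> (address, last_seen)

    def handle(self, msg: Announcement) -> None:
        if msg.domain_id != self.domain_id:
            return  # announcements from other domains are ignored
        if msg.kind == "bye":
            self.peers.pop(msg.node_name, None)
        else:
            self.peers[msg.node_name] = (msg.address, msg.timestamp)

    def live_peers(self, now: float) -> dict:
        """Drop peers not heard from within the lease, then return name -> address."""
        self.peers = {
            name: (addr, seen)
            for name, (addr, seen) in self.peers.items()
            if now - seen <= self.lease_seconds
        }
        return {name: addr for name, (addr, _) in self.peers.items()}


if __name__ == "__main__":
    reg = NodeRegistry(domain_id=0)
    reg.handle(Announcement("camera_1", 0, "10.0.0.21", "alive", 0.0))
    reg.handle(Announcement("robot", 0, "10.0.0.30", "alive", 0.0))
    reg.handle(Announcement("other_lab_node", 5, "10.0.0.99", "alive", 0.0))
    # camera_1 reconnects with a new address; its entry is simply updated
    reg.handle(Announcement("camera_1", 0, "10.0.0.45", "alive", 4.0))
    # robot stops announcing, so it disappears once its lease expires
    print(reg.live_peers(now=12.0))  # {'camera_1': '10.0.0.45'}
```

Nothing in the registry is keyed by IP address, which is the property that makes reconnection with a new address harmless.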

System Components

The software system consists of:

- Camera Python ROS nodes – control image capture

- Robot Python ROS node – controls plate movement

- Scheduler Python ROS node – manages experiment scheduling and workflow

- ROS Bridge server – enables communication between the web UI and ROS nodes

- FastAPI servers – provide REST endpoints for file browsing and log viewing

- Web UI – React app that allows users to configure and monitor experiments

All ROS nodes run in Docker containers built on a base ROS Humble image.
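Assuming the bridge is the standard rosbridge server, it exposes ROS topics to the browser over a WebSocket using JSON frames defined by the rosbridge v2 protocol, with operations such as `subscribe`, `advertise`, and `publish`. In a React app these frames are normally built by a client library such as roslibjs; the Python sketch below only shows their shape. The topic names, the `home` command, and the assumption that JSON payloads travel inside `std_msgs/msg/String` messages are hypothetical.

```python
import json

# Hypothetical topic names; the actual Wormbot topics are not given in this write-up.
FUNCTIONS_TOPIC = "/robot/available_functions"
COMMAND_TOPIC = "/robot/command"
# Assumes JSON payloads are carried in std_msgs String messages.
STRING_TYPE = "std_msgs/msg/String"


def subscribe_frame(topic: str, msg_type: str, sub_id: str) -> str:
    """rosbridge v2 'subscribe' operation: ask the bridge to forward a topic."""
    return json.dumps({"op": "subscribe", "id": sub_id, "topic": topic, "type": msg_type})


def advertise_frame(topic: str, msg_type: str) -> str:
    """rosbridge v2 'advertise' operation: declare that this client will publish."""
    return json.dumps({"op": "advertise", "topic": topic, "type": msg_type})


def publish_json_frame(topic: str, payload: dict) -> str:
    """rosbridge v2 'publish' operation with a JSON document in String.data."""
    return json.dumps({"op": "publish", "topic": topic, "msg": {"data": json.dumps(payload)}})


def decode_incoming(frame: str) -> tuple:
    """Unpack a 'publish' frame that the bridge forwards to a subscribed client."""
    message = json.loads(frame)
    if message.get("op") != "publish":
        raise ValueError(f"unexpected op: {message.get('op')!r}")
    return message["topic"], json.loads(message["msg"]["data"])


if __name__ == "__main__":
    # Frames a UI client might send over the bridge's WebSocket connection.
    print(subscribe_frame(FUNCTIONS_TOPIC, STRING_TYPE, "ui-functions"))
    print(advertise_frame(COMMAND_TOPIC, STRING_TYPE))
    print(publish_json_frame(COMMAND_TOPIC, {"function": "home", "args": {}}))
```

Wrapping JSON in a plain string message keeps the ROS message types trivial while letting the UI and nodes evolve the payload format freely; the trade-off is that ROS itself no longer type-checks the contents.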

Key Technical Achievements

Streamlining Hardware Control Development Through Dynamic Function Discovery

One of the most significant technical achievements was creating a system that allowed the embedded hardware developer on the team to rapidly develop and test robot control functions without requiring me (the UI developer) to manually update interface code each time.

I implemented a function discovery pattern where:

- Hardware-specific functions are defined in a `control_scripts/` directory within each node package

- The `__init__.py` file imports these functions and uses Python's introspection capabilities to extract function names, parameter names, parameter types, and default values

- During node initialization, this metadata is published via JSON messages to a ROS "topic" that the UI subscribes to

- The UI dynamically generates control interfaces based on the discovered functions
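The introspection step can be sketched with the standard library's `inspect` and `typing` modules. This is a minimal illustration, not the Wormbot code: the `move_plate` and `home` functions, the shape of `valid_functions` (assumed here to map names to callables), and the JSON layout of the published metadata are all assumptions.

```python
import inspect
import json
from typing import get_type_hints


# Hypothetical control functions standing in for control_scripts/robot.py.
def move_plate(from_slot: int, to_slot: int, speed: float = 0.5) -> None:
    """Move a plate between two hotel slots."""


def home(verbose: bool = False) -> None:
    """Return the robot arm to its home position."""


# Assumed registry shape: function name -> callable.
valid_functions = {"move_plate": move_plate, "home": home}


def describe(func) -> dict:
    """Extract the name, parameters, type names, and defaults of a function."""
    hints = get_type_hints(func)
    params = []
    for name, param in inspect.signature(func).parameters.items():
        # Unannotated parameters get the placeholder type name "any".
        entry = {"name": name, "type": getattr(hints.get(name), "__name__", "any")}
        if param.default is not inspect.Parameter.empty:
            entry["default"] = param.default
        params.append(entry)
    return {"name": func.__name__, "doc": inspect.getdoc(func) or "", "params": params}


def functions_message(functions: dict) -> str:
    """JSON document a node could publish at startup for the UI to render."""
    return json.dumps([describe(f) for f in functions.values()])


if __name__ == "__main__":
    print(functions_message(valid_functions))
```

Default values must be JSON-serializable for this approach to work; the UI can then map type names such as `int`, `float`, and `bool` to number fields and checkboxes.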

This pattern meant new robot control functions could be added by:

- Writing the function in `control_scripts/robot.py`

- Adding its name to the `valid_functions` dictionary in `__init__.py`

- Restarting the robot Docker container

The testing page in the UI would then automatically display the new function, with input fields matching its parameter types. This dramatically accelerated the development cycle: because adding a function required no UI changes, the embedded hardware developer could add and test control functions independently and focus on hardware control rather than UI implementation.

Containerization and Deployment Strategy

To streamline development, deployment, and maintenance across multiple Raspberry Pis, I designed a containerization strategy addressing three key needs: rapid development iteration, automated NAS connectivity, and one-command deployment to production hardware.

Development Mode with Live Code Reloading

For development, I created a dev.docker-compose.yml configuration that uses bind mounts to map the local package directory into the container, allowing a developer to edit Python files on the Pi's filesystem and see changes reflected immediately.

The development setup uses watchmedo, the command-line tool from the Python watchdog library, to monitor the mounted directory for changes. When a .py file is saved, the watcher automatically kills and restarts the ROS node process, loading the updated code without a container rebuild. This dramatically speeds up the development cycle: developers can test hardware control functions and debug issues in seconds instead of waiting minutes for image rebuilds.
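watchmedo reacts to filesystem events; the same kill-and-restart idea can be shown with a dependency-free polling loop. The sketch below is a stand-in written for illustration, not the project's configuration, and the function names are hypothetical. It compares modification-time snapshots of `.py` files and restarts the child process when anything is added, removed, or changed.

```python
import subprocess
import time
from pathlib import Path


def snapshot(root: Path, suffix: str = ".py") -> dict:
    """Map each matching file under root to its modification time in nanoseconds."""
    mtimes = {}
    for path in root.rglob(f"*{suffix}"):
        try:
            if path.is_file():
                mtimes[str(path)] = path.stat().st_mtime_ns
        except FileNotFoundError:
            pass  # file vanished between listing and stat; treat it as removed
    return mtimes


def changed(before: dict, after: dict) -> set:
    """Paths that were added, removed, or modified between two snapshots."""
    return {p for p in before.keys() | after.keys() if before.get(p) != after.get(p)}


def stop(proc: subprocess.Popen, timeout: float = 5.0) -> None:
    """Ask the process to exit, escalating to a hard kill if it does not."""
    proc.terminate()
    try:
        proc.wait(timeout=timeout)
    except subprocess.TimeoutExpired:
        proc.kill()
        proc.wait()


def run_with_reload(cmd: list, root: Path, interval: float = 1.0) -> None:
    """Run cmd, restarting it whenever a .py file under root changes."""
    state = snapshot(root)
    proc = subprocess.Popen(cmd)
    try:
        while True:
            time.sleep(interval)
            current = snapshot(root)
            if changed(state, current):
                stop(proc)
                proc = subprocess.Popen(cmd)
                state = current
    finally:
        stop(proc)
```

For example, `run_with_reload(["ros2", "run", "robot_pkg", "robot_node"], Path("/ws/src/robot_pkg"))` would keep a node running and restart it on every save; the package and node names are placeholders. Polling rescans the tree every interval, an overhead that an event-based tool like watchmedo avoids.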

Automated SSH Key Management via Docker Volumes

Every node that transfers data to the NAS needs SSH authentication, and configuring keys by hand on each Raspberry Pi would be tedious and error-prone. I solved this by combining Docker named volumes with an entrypoint script that manages the SSH key lifecycle.

Each node's docker-compose file defines a named volume wormbot_ssh_keys that's mounted to /root/.ssh inside the container, persisting across container restarts and updates. The entrypoint script runs before the main ROS node process and implements conditional logic: if SSH keys don't exist in the volume, it generates a new key pair and uses sshpass to copy the public key to the NAS using credentials from the .env file. If keys already exist, it simply updates the known_hosts file and proceeds.

This approach provides one-time setup (SSH keys are generated only on first container startup), persistence (keys survive container updates), full automation (no manual intervention beyond the initial NAS password), and security (private keys never leave the container or volume).
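The entrypoint's conditional logic can be sketched in Python, although an entrypoint like this is often a shell script. Everything specific here is an assumption: the `id_ed25519` key name, the `NAS_USER`, `NAS_HOST`, and `NAS_PASSWORD` variable names, and the use of `ssh-keyscan` to refresh `known_hosts`. The `ssh-keygen`, `ssh-copy-id`, and `sshpass -e` invocations use standard flags.

```python
import os
import shlex
import subprocess
from pathlib import Path


def entrypoint_commands(ssh_dir: Path, nas_user: str, nas_host: str) -> list:
    """Shell commands to run before the ROS node starts, given the volume's state."""
    key = ssh_dir / "id_ed25519"  # hypothetical key file name
    known_hosts = ssh_dir / "known_hosts"
    # Every start: record the NAS host key so ssh never prompts (appends; duplicates are harmless).
    commands = [f"ssh-keyscan -H {shlex.quote(nas_host)} >> {shlex.quote(str(known_hosts))}"]
    if not key.exists():
        # First start only: create a passphrase-less key pair inside the volume...
        commands.append(f"ssh-keygen -t ed25519 -N '' -f {shlex.quote(str(key))}")
        # ...and install the public key on the NAS; sshpass -e reads the password from $SSHPASS.
        commands.append(
            f"sshpass -e ssh-copy-id -i {shlex.quote(str(key) + '.pub')} "
            f"{shlex.quote(nas_user + '@' + nas_host)}"
        )
    return commands


def run_entrypoint(ssh_dir: Path = Path("/root/.ssh")) -> None:
    """Execute the setup commands; the NAS_* names are hypothetical .env keys."""
    env = {**os.environ, "SSHPASS": os.environ.get("NAS_PASSWORD", "")}
    ssh_dir.mkdir(mode=0o700, parents=True, exist_ok=True)
    for cmd in entrypoint_commands(ssh_dir, os.environ["NAS_USER"], os.environ["NAS_HOST"]):
        subprocess.run(cmd, shell=True, check=True, env=env)
```

Separating the decision (`entrypoint_commands`) from its execution keeps the first-run branch easy to test; after `run_entrypoint()` returns, the entrypoint would start the ROS node. In this simplified version, if the copy step fails after the key was generated, it is not retried on the next start.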

Streamlined Production Deployment via Install Script

To make deploying Wormbot to new hardware as simple as possible, I created a deployment script (wormbot-install.sh) hosted on an internal Janelia web server, allowing users to deploy any node type with a single command:

curl -fsSL https://host.org/wormbot-install.sh | \
sudo DEVICE=camera_12 TYPE=camera bash

The script handles the complete deployment workflow: validates inputs, pulls the latest image from Janelia's Docker registry, creates the directory structure, generates an .env file with device ID and NAS credentials, extracts the production docker-compose.yml from the image, and starts the services.

This approach provides minimal setup requirements (users only need Docker and environment variables), version control (the docker-compose configuration is embedded in the versioned image), flexibility (environment variable overrides for custom configurations), and validation (built-in error checking for common mistakes).
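The validation and `.env` generation steps can be sketched as follows. The real install script is a shell script; this Python version is only illustrative, and the accepted node types, the device-ID naming rule, and the `.env` key names are hypothetical.

```python
import re

# Hypothetical node types; this write-up mentions camera, robot, and scheduler nodes.
NODE_TYPES = {"camera", "robot", "scheduler"}
# Hypothetical naming rule for device IDs such as "camera_12".
DEVICE_PATTERN = re.compile(r"[a-z][a-z0-9_]*")


def validate(device: str, node_type: str) -> list:
    """Return a list of problems; an empty list means the inputs look usable."""
    problems = []
    if node_type not in NODE_TYPES:
        problems.append(f"TYPE must be one of {sorted(NODE_TYPES)}, got {node_type!r}")
    if not DEVICE_PATTERN.fullmatch(device or ""):
        problems.append(f"DEVICE {device!r} should use lowercase letters, digits, and underscores")
    return problems


def render_env(device: str, node_type: str, nas_host: str, nas_user: str, nas_password: str) -> str:
    """Build .env contents for docker compose (key names are hypothetical)."""
    values = {
        "DEVICE_ID": device,
        "NODE_TYPE": node_type,
        "NAS_HOST": nas_host,
        "NAS_USER": nas_user,
        "NAS_PASSWORD": nas_password,
    }
    for key, value in values.items():
        if "\n" in value:
            raise ValueError(f"{key} must not contain a newline")
    return "".join(f"{key}={value}\n" for key, value in values.items())


if __name__ == "__main__":
    problems = validate("camera_12", "camera")
    print(problems or render_env("camera_12", "camera", "nas.local", "wormbot", "example"))
```

Collecting every problem before exiting lets a user fix all input mistakes in one pass rather than rerunning the script once per error.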

Current Status and Future Work

By combining live code reloading for development, automated SSH key management, and one-command deployment, I created a system that's developer-friendly during active development and operationally simple for production deployment. Researchers can set up a new node in minutes with a single command, while developers can iterate on hardware control code in seconds.

The system is operational and has received positive feedback from test users. Current development focuses on:

- Conducting the first full-scale experiment with multiple plates

- Scaling to multiple robot/hotel setups

- Adding scheduler flexibility for more complex imaging patterns

- Implementing additional UI features for experiment monitoring

- Exploring ROS services in addition to topics for request/response interactions

The modular architecture and careful attention to documentation position Wormbot well for adoption by other research labs seeking to automate nematode lifespan studies.